When we launched the Perle Labs Beta, the goal was simple: create a space where humans could directly contribute to the next generation of AI through high-quality judgment.

Over the past few months, that idea grew into an active contributor ecosystem, with thousands of contributors completing real tasks and helping shape the first working version of Perle Labs. Participation stayed active and consistent throughout the beta.

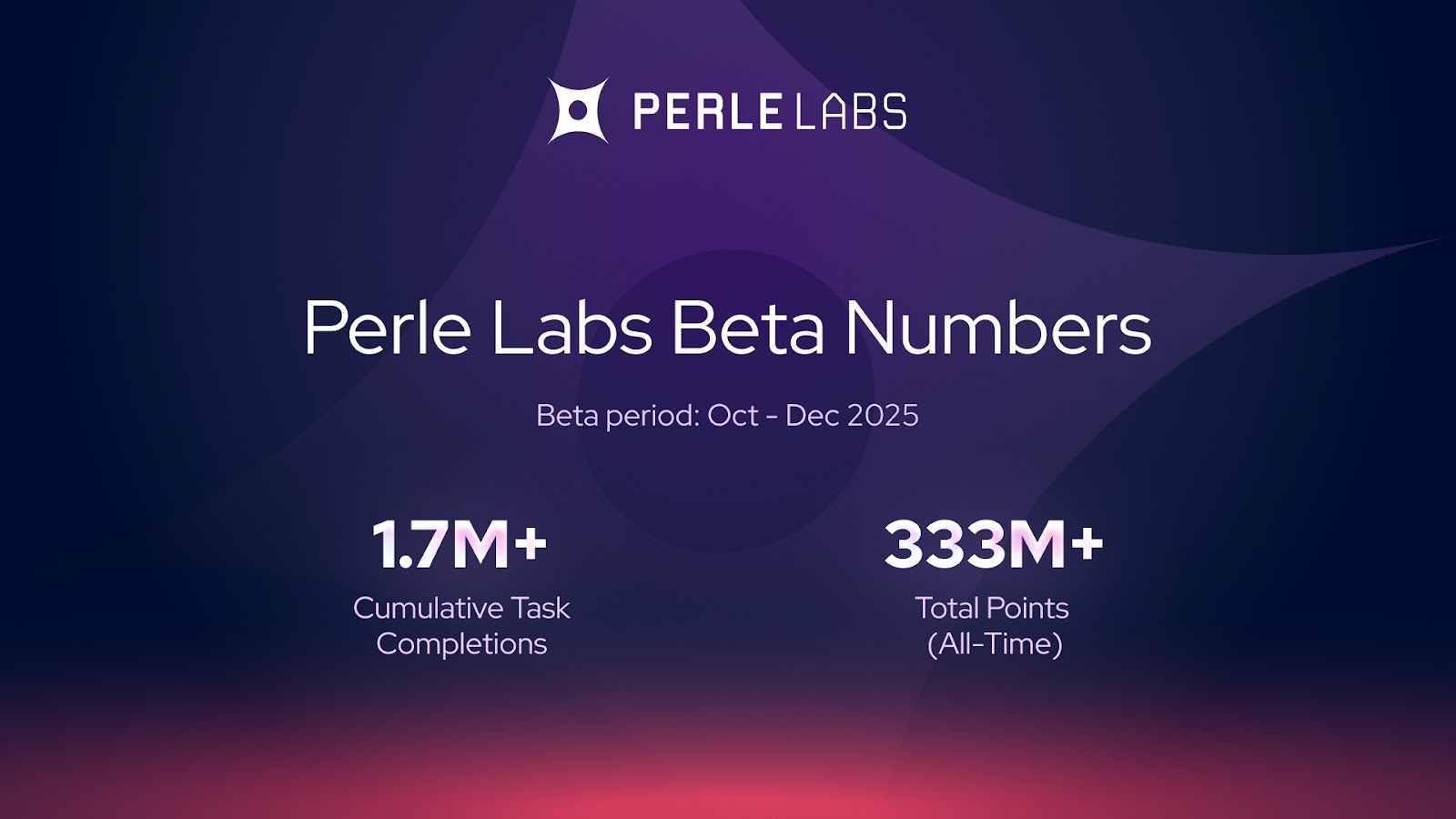

The numbers speak for themselves:

Contributors collectively completed more than 1.7M tasks, accounting for 333M+ points across the platform. These metrics reflect the scale and consistency of participation throughout the beta.

Why We Started the Beta

Modern AI systems depend on high-fidelity, high-context data. Generic labeling pipelines and synthetic shortcuts fall short where expertise, nuance, and accountability matter.

Perle Labs was built to address this by combining human judgment, transparent validation, and onchain provenance into a single contributor-driven system. The beta was our first opportunity to test that model in practice.

Through structured tasks, contributors surfaced edge cases, refined workflows, and helped validate the quality standards behind Perle Labs. The beta confirmed that a decentralized contributor network, supported by reputation and quality controls, can consistently produce high-quality data.

This became the foundation for everything ahead.

Bringing the First Version to Life

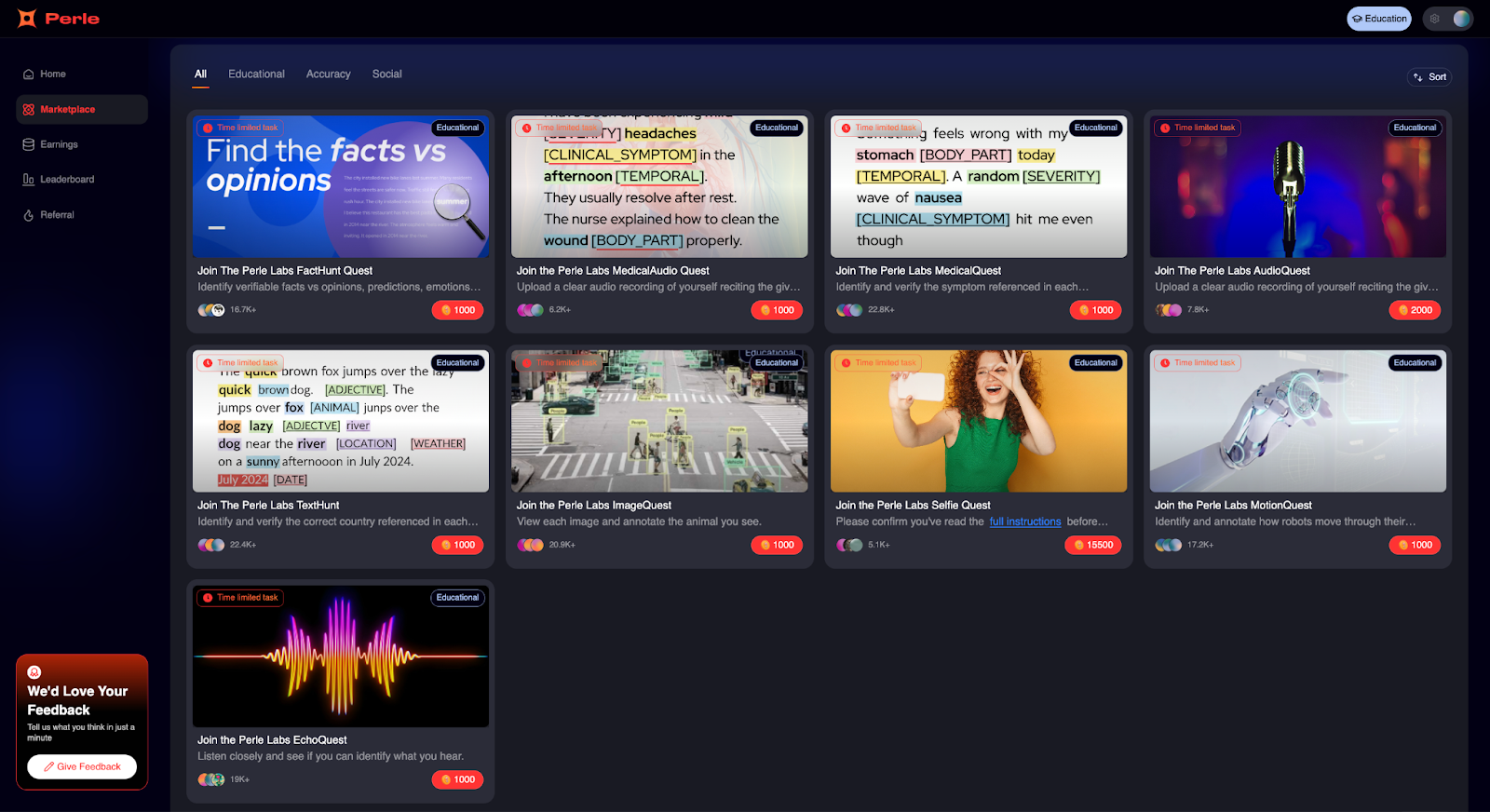

Throughout the beta, we built and refined the core systems that will power Perle Labs as it expands. Contributors completed nine structured tasks across multiple modalities, giving us clear signal on accuracy, task complexity, and contributor learning curves.

Task categories included:

- Text-based tasks, focused on language understanding and reasoning

- Audio-based tasks, covering voice, speech, and everyday sound identification

- Image-based tasks, involving visual recognition, object tagging, and real-world capture

- Motion-based tasks, centered on annotating robotic movement

Together, these tasks helped us evaluate how different data types perform in practice, where contributors excel, and how workflows scale as complexity increases. They also helped us refine task designs that balance usability with strict quality requirements.

Quality, Validation, and Progression

The beta strengthened Perle Labs’ validation systems through clearer guidelines, improved instructions, and real-time accuracy feedback that helped contributors calibrate quality as they worked.

These improvements led to more consistent datasets and clearer contributor progression, with top contributors showing how accuracy and consistency drive performance over time.

Together, these systems form the first production-ready version of Perle Labs: functional, reliable, and built to scale.

What Comes Next

The beta laid the foundation for human-led AI at scale, pointing toward a system that goes beyond annotation and supports deeper forms of human input across the AI training process.

The beta validated what makes this possible: a contributor network capable of delivering consistent, high-quality human judgment. As Perle Labs expands into more complex areas, contributors will play an increasingly central role.

Your work laid the groundwork for what comes next, and we’re excited to bring you into the next season very soon!
